One of the tools I have used for backup and recovery is etcdctl, a command-line tool for managing Etcd. The concept is pretty simple: you take a snapshot of the database and save it to a file. Where it gets complicated is that if you are running a multi-node control plane, you have to use that snapshot to restore every one of the Etcd nodes.
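As a rough illustration of the snapshot step, here is a minimal sketch of a CronJob that runs `etcdctl snapshot save` on a control plane node. It assumes a kubeadm-style cluster with stacked Etcd, where the Etcd certificates live under `/etc/kubernetes/pki/etcd`. The job name, schedule, image tag, and backup path are placeholders I picked for the example, not part of my actual setup, so adjust them to match your cluster.

```yaml
# Sketch only: assumes kubeadm defaults (stacked Etcd, certs in /etc/kubernetes/pki/etcd).
apiVersion: batch/v1
kind: CronJob
metadata:
  name: etcd-snapshot            # hypothetical name
  namespace: kube-system
spec:
  schedule: "0 2 * * *"          # placeholder: daily at 02:00
  concurrencyPolicy: Forbid      # never run two snapshots at once
  jobTemplate:
    spec:
      template:
        spec:
          hostNetwork: true      # reach Etcd on the node's loopback address
          restartPolicy: OnFailure
          nodeSelector:
            node-role.kubernetes.io/control-plane: ""
          tolerations:
            - key: node-role.kubernetes.io/control-plane
              operator: Exists
              effect: NoSchedule
          containers:
            - name: snapshot
              # Assumption: use an image tag that matches your cluster's Etcd version.
              image: registry.k8s.io/etcd:3.5.12-0
              command:
                - etcdctl
                - --endpoints=https://127.0.0.1:2379
                - --cacert=/etc/kubernetes/pki/etcd/ca.crt
                - --cert=/etc/kubernetes/pki/etcd/server.crt
                - --key=/etc/kubernetes/pki/etcd/server.key
                - snapshot
                - save
                - /backup/etcd-snapshot.db   # fixed file name; rotate or copy it elsewhere yourself
              volumeMounts:
                - name: etcd-certs
                  mountPath: /etc/kubernetes/pki/etcd
                  readOnly: true
                - name: backup
                  mountPath: /backup
          volumes:
            - name: etcd-certs
              hostPath:
                path: /etc/kubernetes/pki/etcd
                type: Directory
            - name: backup
              hostPath:
                path: /var/backups/etcd      # placeholder path on the node
                type: DirectoryOrCreate
```

This only covers taking the snapshot. Restoring still has to be done on every Etcd member from the same snapshot file, which is the part that takes care in a multi-node control plane.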

Adventures with Kubernetes

If you've never self-hosted Kubernetes, you may not be aware of the critical pieces that keep it running, Etcd being one of them. Etcd is the distributed key-value store for the cluster's data; without it, the cluster can't function. Here are some of the lessons I've learned running my own cluster over the years.

Most tutorials for self-hosting Kubernetes go with a basic three-node setup: one control plane and two workers. This is fine in most cases, but if you are looking for HA it won't work. The moment you have to shut down or reboot that control plane, your cluster becomes unavailable. These are my tips on setting up an HA cluster and moving from a single control plane to many.

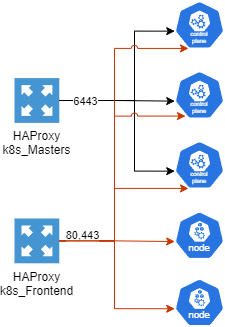

An external load balancer is a great way to make not only your control plane highly available but also your workers. In my setup I use the HAProxy package in pfSense to load balance my control planes and workers behind a virtual IP. Below is a diagram of how I have set up my control planes and nodes. Port 6443 load balances the Kubernetes API endpoints on the control planes, and ports 80 and 443 are load balanced across all nodes.

On each control plane there are kubeconfig files for each of the Kubernetes services that run as Pods in the cluster. These can be found in the `/etc/kubernetes` directory and need to be updated (example below) to use the load balancer to contact the Kubernetes API endpoints. Before doing this, though, the first step is updating the API server certificate to include the IP of the load balancer as a SAN so the kube-apiservers won't reject the traffic. I found a complete walkthrough of how to do this on Scott Lowe's website.

    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority-data:
        server: https://kube.example.com:6443
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        user: system:kube-controller-manager
      name: system:kube-controller-manager@kubernetes
    current-context: system:kube-controller-manager@kubernetes
    kind: Config
    preferences: {}
    users:
    - name: system:kube-controller-manager
      user:
        client-certificate-data:
        client-key-data:
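For the certificate step mentioned above, here is a minimal sketch of what the kubeadm side might look like, assuming the cluster was built with kubeadm. The hostname is the one from the kubeconfig example; the IP address is a documentation placeholder, not my real virtual IP.

```yaml
# Sketch only: assumes a kubeadm-managed cluster.
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
# Address that components and clients use to reach the API servers (the load balancer).
controlPlaneEndpoint: "kube.example.com:6443"
apiServer:
  certSANs:
    - "kube.example.com"   # load balancer DNS name
    - "192.0.2.10"         # placeholder virtual IP; replace with your own
```

With a file like this, kubeadm can regenerate the API server certificate with the extra SANs (for example with `kubeadm init phase certs apiserver --config <file>`) once the existing certificate and key have been moved out of the way. Treat this as an outline and follow a full walkthrough for the exact steps on your version.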

Unlike a Kubernetes cluster hosted in a public cloud, a self-hosted cluster doesn't have the same storage provider options. Automatic provisioning of persistent volumes doesn't work out of the box, so if you need storage that isn't tied to a specific node, NFS is probably the best option.
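To make the NFS option concrete, here is a minimal sketch of a statically provisioned NFS volume and a claim that binds to it. The server name, export path, resource names, and size are all placeholders for illustration.

```yaml
# Sketch only: a manually created NFS-backed volume.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-example-pv           # hypothetical name
spec:
  capacity:
    storage: 10Gi                # placeholder size
  accessModes:
    - ReadWriteMany              # NFS can be mounted by Pods on different nodes
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""           # empty class: no dynamic provisioner involved
  nfs:
    server: nfs.example.com      # placeholder NFS server
    path: /exports/k8s           # placeholder export path
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-example-pvc          # hypothetical name
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""           # must match the volume's (empty) class
  volumeName: nfs-example-pv     # bind explicitly to the volume above
  resources:
    requests:
      storage: 10Gi
```

Because the volume points at an NFS export rather than a local disk, a Pod using this claim can be rescheduled to any node that can reach the NFS server.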

Networking in Kubernetes can be a little complicated and easy to break. Below are some of my tips from breaking my clusters many times and having to diagnose and repair them, as well as things that make your life easier when exposing applications.

CNI stands for Container Network Interface, and a CNI plugin is what allows the pods/containers in your cluster to communicate. You should check your CNI version whenever you update the cluster's version of Kubernetes to make sure it is still supported. I personally use Calico, but there are several to pick from; see the CNCF list of CNIs.